4.13. pyopus.optimizer.qpmads — Mesh adaptive direct search with quadratic programming¶

Mesh Adaptive Direct Search with quadratic programming (PyOPUS subsystem name: MADSOPT)

Mesh adaptive direct search based on [mads]. The 2n version uses orthogonal directions [madsort]. Handles infeasible initial points with the progressive barrier approach [madspb].

Supports uniform distribution of the search directions according to [unimads].

Builds and solves quadratic models based on [qpmads].

- mads

Audet C., Dennis Jr. J.E., Mesh Adaptive Direct Search Algorithms for Constrained Optimization. SIAM Journal on Optimization, vol. 17, pp. 188-217, 2006.

- madsort

Abramson M.A., Audet C., Dennis Jr. J.E., Le Digabel S., ORTHO-MADS: A Deterministic MADS Instance with Orthogonal Directions. SIAM Journal on Optimization, vol. 20, pp.948-966,2009.

- madspb

Audet C., Dennis Jr. J.E., A Progressive Barrier for Derivative-Free Nonlinear Programming. SIAM Journal on Optimization, vol. 20, pp. 445-472, 2009.

- unimads

Bűrmen Á., Tuma T., Generating Poll Directions for Mesh Adaptive Direct Search with Realizations of a Uniformly Distributed Random Orthogonal Matrix, Pacific Journal of Optimization, acepted for publication, 2015.

- qpmads

Bűrmen Á., Olenšek J., Tuma T., Mesh Adaptive Direct Search with Second Directional Derivative-Based Hessian Update, Computational Optimization and Applications, DOI: 10.1007/s10589-015-9753-5, 2015.

Not all member functions are documented because this module is under development.

-

class

pyopus.optimizer.qpmads.QPMADS(function, xlo=None, xhi=None, constraints=None, clo=None, chi=None, fc=None, cache=False, fgH=None, cJ=None, debug=0, fstop=None, maxiter=None, scaling=1.0, meshBase=4.0, initialMeshDensity=1.0, stepBase=2.0, startStep=1.0, maxStep=inf, stopStep=1e-12, irregularMesh=False, generator=2, qraugment=0, unifmat=0, protoset=1, rounding=1, sequenceReset=False, boundSnap=False, boundSlide=False, roundOnFinestMeshEntry=False, greedy=True, speculativePollSearch=True, fabstol=0.0, freltol=1.1102230246251565e-16, xabstol=0.0, xreltol=1.1102230246251565e-16, Habstol=None, Hreltol=None, HinitialDiag=0.0, model=True, linearUpdate=True, simplicalUpdate=False, simplicalUpdatePoisedness=True, pointHistory=(2, 1), minRegressionPoints=(1, 1), maxRegressionPoints=(1, 1), regressionDistNorm='L1', relativeSingularValueBound=True, boundStepMirroring=True, linearUpdateExtend=True, forceSimplicalUpdate=False, rho=4.0, lastDirectionOrdering=False, modelOrdering=True, normalizeCV=False, modelSearch=True, beta=0.001, hessianModification='eig', hessianModificationEigRelDelta=True, hessianModificationEigDelta=2.220446049250313e-16, rhoNeg=inf, rhoNegConstr=inf, rhoPos=inf, trType='MADS', qpFeasibilityRestoration=False, speculativeModelSearch=False, stepCutAffectsUpdate=True, speculativeStepAffectsUpdate=False, hmax=0.0, stretchHmax=True, qpAcceptAnyPosition=True)[source]¶ Mesh Adaptive Direct Search

See the

ConstrainedOptimizerfor the explanation of function, xlo, xhi, constraints, clo, chi, fc, cache, debug, fstop, and maxiter.fgH specifies a function that evaluates the function gradient and Hessian. The function should return them as a tuple with gradient as the first component and the Hessian as the second component.

cJ specifies a function that computes the Jacobian of the nonlinear constraints. The function returns a Numpy array with first index for the constraint and the second index for the optimization variable.

scaling is the scaling of the problem. If it is scalar, it applies to all components of the independent variable. If it is a vector scaling can be specified independently for every component.

meshBase is the base for constructing the mesh. Mesh densities are powers of this number.

initialMeshDensity specifies the density of the initial mesh. The mesh size is obtained by dividing the power of the meshBase with this number.

stepbase is the base for constructing the step size. Steps size is computed as some constant times a power of this number.

startStep is the initial step size. The exponents of the initial mesh and step size is chosen in such manner that the initial step closely approximates this number.

maxStep is the upper limit for step size.

stopStep is the step size at which the algorithm stops.

irregularMesh turns on irregular mesh implemented via a VP-tree.

generator is the direction generator method.

0 – OrthoMADS

1 – QRMADS

2 – UniMADS (uniformly distributed directions)

unifmat is the matrix construction method for QRMADS and UniMADS. This matrix is used as the input of the QR decomposition. nb is the smallest even number not greater than n.

0 – n independent random samples from \([0,1)^{nb}\)

1 – n samples from nb-dimensional Sobol sequence, advance generator by one sample

2 – 1 sample from nb-dimensional Sobol sequence and n random samples from \([0,1)^{nb}\)

3 – n+1 samples from nb-dimensional Sobol sequence, advance generator by one sample

4 – n+1 independent random samples from \([0,1)^{nb}\)

5 – one sample from nb(n+1)-dimensional Sobol sequence (take nb rows, n columns)

6 – one sample from nb(n+1)-dimensional Sobol sequence (take nb rows, n+1 columns)

qraugment is the matrix augmentation method for QRMADS. The first column is always transformed with the Box-Muller transformation resulting in a vector that (when normalized) is uniformly distributed on the unit sphere. The remaining columns are transformed in the following manner:

0 – matrix elements transformed to uniform distribution over [-1,1]

1 – matrix elements transformed to uniform distribution over [0,1]

2 – matrix elements transformed to normal distribution \(N(0,1)\)

3 – same as 2, except that the first column is fixed (the first element is 1, the remaining elements are 0)

qraugment does not affect UniMADS. For UniMADS all matrix elements are transformed to normal distribution \(N(0,1)\) before QR decomposition takes place.

protoset specifies the prototype set for UniMADS

0 – regular simplex with n+1 points

1 – n orthogonal vectors and their negatives

2 – n orthogonal vectors and the normalized negative sum of these vectors

rounding specifies the rounding mode for QRMADS and UniMADS.

0 – round to sphere

1 – round to hypercube

Two generators are created for generating random/Halton/Sobol sequences. The main generator is used for most iterations, while the auxiliary generator is used for the iterations involving the finest-yet meshes. If sequencereset is

Truethe main generator is reset to the state of the auxiliary generator whenever the finest-yet mesh is reached.Setting boundSlide to

Truesets the components of a point that violate bound to the value of the respective bound. This makes the point feasible.Setting boundSnap to

Trueshortens all steps that result in bound violation to such extent that the resulting point lies on the bound with largest violation. This makes the point feasible.Setting roundOnFinestMeshEntry to

Truerounds the first point examined on the finest-yet mesh to the nearest mesh point. Otherwise this point is not rounded.greedy turns on greedy evaluation in the poll step (stops the poll step as soon as a better point is found).

speculativePollSearch enables the speculative step after a successful poll step.

fabstol and freltol specify the absolute and the relative error of the computed function value used for estimating the error of the Hessian update.

xabstol and xreltol specify the absolute and the relative error of the point position used for estimating the error of the Hessian update.

Habstol and Hreltol specify the maximal absolute and relative error of the Hessian update for which the update is applied. Setting them to

Noneapplies the update regardless of the error.HinitialDiag specifies the diagonal elements of the initial hessian approximation. If this is a scalar all diagonal elements of the initial approximation are identical.

Setting model to

Trueturns on the construction of the quadratic model of the function and constraints.Setting linearUpdate to

Trueturns on Hessian updating based on three collinear points. This type of updating works well with protoset set to 1. With protoset=0 this update can be applied at certain steps (speculative search) but by itself is not sufficient for building a Hessian approximation with a reasonable number of function evaluations. It can, however, be used together with simplicalUpdate for protoset=0.Setting simplicalUpdate to

Trueturns on Hessian updating based on n+2 points. Works well for protoset set to 2.pointHistory is a tuple specifying the point history size. The point history length is computed as tuple[0]*n+tuple[1] where n is the problem dimension.

minRegressionPoints is a tuple specifying the minimum number of points required by the linear regression (excluding the origin point). The minimal number of points is computed as tuple[0]*n+tuple[1] where n is the problem dimension.

maxRegressionPoints is a tuple specifying the maximum number of points used by the linear regression (excluding the origin point). The maximal number of points is computed as tuple[0]*n+tuple[1] where n is the problem dimension.

regressionDistNorm sets the norm used for computing the point’s distance to determine whether the point is to be used in regression. can be set to

L1orL2.boundStepMirroring turns on mirroring of points that violate bounds.

linearUpdateExtend evaluates an additional expansion point if a point violates the bound. useful with protoset set to 1. If direction d violates bounds and -d does not an additional point along direction -2d is evaluated. This results in 3 collinear points that make it possible to apply the linearUpdate.

forceSimplicalUpdate forces a simplical update after every failed poll step (assuming that simplical update is enabled).

rho specifies the distance within which points are collected for regression.

lastDirectionOrdering enables the ordering of poll steps based on the last successful direction.

modelOrdering enables the ordering of poll steps based on the quadratic model.

modelSearch turns on quadratic model-based search (uses CVXOPT). This search is also referred to as the QP search.

beta is the parameter used in the algorithm for finding a positive definite Hessian.

rhoNeg is the trust region radius used when the Hessian is not positive definite and teh problem is unconstrained.

rhoNegConstr is the trust region radius used when the Hessian is not positive definite and the problem is constrained.

Setting qpFeasibilityRestoration to

Trueshrinks the QP computed step so that it satisfies the model constraints.speculativeModelSearch turns on the speculative step after a successful QP serach step.

hmax is the maximal constraint violation that will be accepted by the filter. This value is overridden by the initial point constraint violation if stretchHmax is enabled and the initial point constraint violation is greater than hmax.

Setting stretchHmax to

Truecauses the optimizer to replace hmax with the initial point constraint violation if the latter is greater than hmax.Setting qpAcceptAnyPosition will accept any point that is not dominated by the filter. Disabling this option makes QP step acceptace behave in the same manner as the poll step and the speculative search step.

Filtering is disabled by setting hmax to 0 and stretchHmax to

False.Setting hmax to 0, stretchHmax to

True, and qpAcceptAnyPosition toFalsewill use a simplified filtering approach where infeasible points are allowed and the filter comprises only the least infeasible point.If stepCutAffectsUpdate is enabled a step cut caused by bound sliding or bound snapping prevents the mesh and the step size from increasing, even when the step is successful.

If speculativeStepAffectsUpdate is enabled a rejected speculative step prevents the mesh and the step size from increasing.

See the

Optimizerclass for more information.-

check()[source]¶ Checks the optimization algorithm’s settings and raises an exception if something is wrong.

-

generatePollSteps(reduction=False)[source]¶ Generates a scaled and rounded set of poll steps.

- method can be

0 – original OrthoMADS 1 – QRMADS 2 – UniMADS 3 – 2 opposite vectors

-

gridRestrain(x, avoidOrigin=False)[source]¶ Returns a point restrained to the current grid. If avoidOrigin is

Truerounds away from the origin.The point is a normalized point (i.e. needs to be multiples by self.scaling to get the actual step).

-

modelOrderSteps(p, H, g, J=None, c=None, reverse=False)[source]¶ Order steps p according to quadratic model given by H and g.

If J and c are given linearized constraints of the form clo<=Jx+c<=chi are considered as the primary criterion for ordering.

If reverse is

Trueconsiders pairs of the forms (p, -p) first. After that the resulting points are ordered.Note that steps are normalized steps. The model applies to normalized steps. Actual steps are obtained by scaling p with self.scaling.

-

orderSteps(p, refDir, reverse=False)[source]¶ Orders the steps in ascending angle order with respect to refDir.

If reverse is True, steps are reversed when angle is greater than pi/2.

Note that steps are normalized steps. Actual steps are obtained by multiplying them with self.scaling.

-

qpAcceptAnyPosition¶ Parameters that will be removed in future versions:

Model search evaluates multiple trial points. It starts with the step predicted by the QP solver. If it fails the step is scaled with modelSearchShrinkFactor and retried.

modelSearchStepLimit specifies the number of trial points in the model search before it gives up and fails.

speculativeSteps specifies the number of speculative steps tried after a successful poll step. Every speculative step is twice longer than the last successful step.

-

reset(x0)[source]¶ Puts the optimizer in its initial state and sets the initial point to the 1-dimensional array x0. The length of the array becomes the dimension of the optimization problem (

ndimmember). The length of x must match that of xlo and xhi.

-

restoreFeasibility(x0, d, H=None, g=None, J=None, c=None, boundCheck=True, boundSlide=False, rounding=True)[source]¶ - Restore feasibility by shrinking d until x0 + d * self.scaling

satisfies bounds reduces the model (if H and g are given) satisfies linearized constraints (if J and c are given)

Note that the model applies to the normalized step.

Applies rounding to d if rounding is set to

True.Returns final scaled point, the used shrink factor a, and a flag indicating sliding was used.

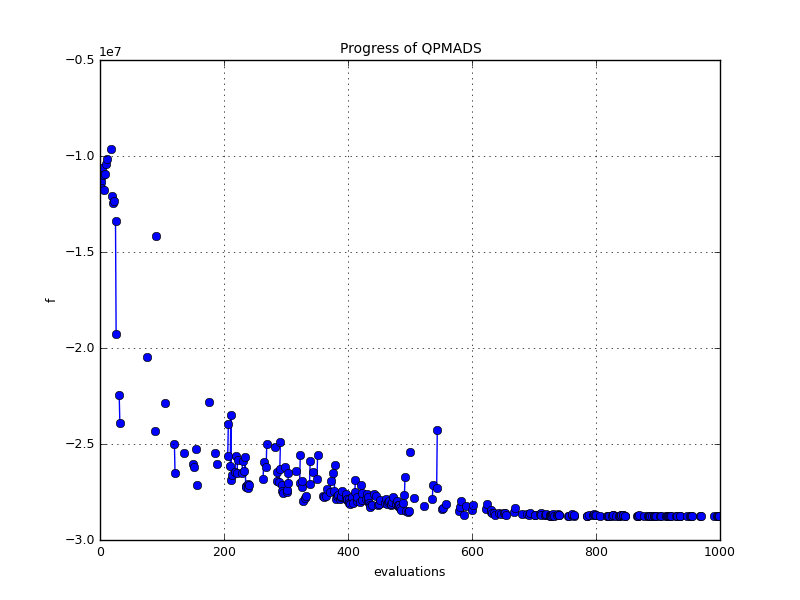

Example file qpmads.py in folder demo/optimizer/

# Optimize STYRENE function with QPMADS

# Collect cost function and plot progress

from pyopus.optimizer.qpmads import QPMADS

from pyopus.problems.madsprob import STYRENE

from pyopus.optimizer.base import Reporter, CostCollector

import numpy as np

from numpy import array, zeros, arange

from numpy.random import seed

from pyopus.plotter import interface as pyopl

if __name__=='__main__':

seed(0)

prob=STYRENE()

opt=QPMADS(

function=None,

fc=prob.fc, # f and c are evaluated simultaneously

xlo=prob.xl, xhi=prob.xh,

clo=prob.cl, chi=prob.ch,

debug=0, maxiter=1000

)

cc=CostCollector()

opt.installPlugin(cc)

opt.installPlugin(Reporter(onIterStep=100))

opt.reset(prob.initial)

opt.run()

cc.finalize()

f1=pyopl.figure()

pyopl.lock(True)

# If constraints are violated, no point is plotted

fval=np.where(cc.hval>0, np.nan, cc.fval)

if pyopl.alive(f1):

ax=f1.add_subplot(1,1,1)

ax.plot(arange(len(fval)), fval, '-o')

ax.set_xlabel('evaluations')

ax.set_ylabel('f')

ax.set_title('Progress of QPMADS')

ax.grid()

pyopl.draw(f1)

pyopl.lock(False)

print("x=%s f=%e" % (str(opt.x), opt.f))

pyopl.join()