10.6.4. Evaluation of opamp’s performance

We are going to set up the evaluation of a Miller opamp. For now we are not going to use any of the pyopus.design module’s facilities. The script below is from file 01-evaluator.py in folder demo/design/miller/

# Advanced performance evaluator demo

# Import definitions
from definitions import *

# Import PerformanceEvaluator class
from pyopus.evaluator.performance import PerformanceEvaluator

# Import MPI support
# If MPI is imported an application not using MPI will behave correctly
# (i.e. only slot 0 will run the program) even when started with mpirun/mpiexec
from pyopus.parallel.mpi import MPI

# Import cooperative multitasking OS which will also take care of job distribution
from pyopus.parallel.cooperative import cOS

# Plotting support
from pyopus.plotter import interface as pyopl

# pprint for pretty printing data structures
from pprint import pprint

# pickle for loading results
import pickle

# NumPy
import numpy as np

if __name__=='__main__':
    # Prepare statistical parameters dictionary with nominal values
    nominalStat={ name: desc['dist'].mean() for name, desc in statParams.items() }

    # Prepare operating parameters dictionary with nominal values
    nominalOp={ name: desc['init'] for name, desc in opParams.items() }

    # Prepare initial design parameters dictionary
    initialDesign={ name: desc['init'] for name, desc in designParams.items() }

    # Prepare one corner
    # defined for all simulator setups
    # module 'tm' defines the typical MOS models
    # operating parameters are set to nominal values
    corners={
        'nom': {
            'params': nominalOp,
            'modules': ['tm']
        }
    }

    # Prepare parallel evaluation environment, cOS will use MPI as its backend
    # Remote tasks will be started in their own local folders. Before a task
    # is started all files in the current folder of the machine that spawns
    # the remote task will be copied to that task's local folder (this is
    # specified by the mirrorMap argument to MPI constructor).
    cOS.setVM(MPI(mirrorMap={'*':'.'}))

    # In definitions.py measures have no corners listed.
    # Therefore they will be evaluated across all specified corners.
    # The storeResults argument makes the evaluator store the waveforms
    # in temporary result files in the system TMP folder (because resultsFolder
    # is set to None) with prefix 'restemp.'
    pe=PerformanceEvaluator(
        heads, analyses, measures, corners, variables=variables,
        storeResults=True, resultsFolder=None, resultsPrefix="restemp.",
        debug=2
    )

    # Evaluate, pass initial design parameters and nominal statistical parameters
    # to the netlist.
    results, anCount = pe([initialDesign, nominalStat])

    # Print formatted results (values of performance measures)
    print(pe.formatResults(nMeasureName=10, nCornerName=15))

    # Print the list of temporary pickled result files across hosts where
    # evaluations took place. This is a dictionary with (host, (corner, analysis))
    # for key. None for host means the results are stored on the local machine
    # (i.e. the one that invoked the evaluator).
    print("\nResult files across hosts")
    pprint(pe.resFiles)

    # Extract and print the set of hosts where evaluations took place
    s=set()
    for h, k in pe.resFiles.keys():
        s.add(h)
    print("\nSet of hosts where results are stored (None is localhost):", s)

    # To access all result files we must first move them to the host where
    # pe() was called. The files will be moved to the current directory
    # (destination='.'). The collected files will have prefix 'res.'. Files
    # will be moved (i.e. deleted from remote machines).
    files={}
    files=pe.collectResultFiles(destination=".", prefix="res.", move=True)

    # Print names of pickled result files after they were moved here.
    # This is a dictionary with (corner, analysis) for key.
    print("\nCollected result files")
    pprint(files)

    # Result file with key ('nom', 'blank') in the above mentioned dictionary
    # holds the environment where dependent measures without an analysis were
    # evaluated for the 'nom' corner. Load it and print the environment.
    with open(files[('nom', 'blank')], 'rb') as f:
        noneRes=pickle.load(f)

    print("\n'blank' analysis measure evaluation environment")
    pprint(noneRes.evalEnvironment())

    # Load the ac analysis results and plot gain in dB vs frequency
    with open(files[('nom', 'ac')], 'rb') as f:
        acRes=pickle.load(f)

    # Extract frequency scale and gain.
    freq=np.real(acRes.scale())
    gain=20*np.log10(np.abs(acRes.v('out')/acRes.v('inp', 'inn')))

    # Plot gain.
    f1=pyopl.figure(windowTitle="AC analysis", figpx=(600,400), dpi=100)
    pyopl.lock(True)
    if pyopl.alive(f1):
        ax1=f1.add_axes((0.12,0.12,0.76,0.76))
        ax1.semilogx(freq, gain, '-', label='gain [dB]', color=(1,0,0))
        ax1.set_xlabel('f [Hz]')
        ax1.set_ylabel('gain [dB]')
        ax1.set_title('Gain vs frequency')
        ax1.grid(True)
        pyopl.draw(f1)
    pyopl.lock(False)

    # Wait for the user to close the plot environment control window.
    pyopl.join()

    # Delete collected result files
    pe.deleteResultFiles()

    # Cleanup temporary files generated by the evaluator
    pe.finalize()

    # Finalize cOS parallel environment
    cOS.finalize()

The script first prepares dictionaries with nominal statistical parameter values (0), nominal operating parameter values and initial design parameter values. The nominal corner named nom is defined. This corner adds the ‘tm’ module to the input netlist for the simulator and specifies the nominal values of operating parameters.
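The dictionary comprehensions can be tried in isolation. The following is only a minimal sketch: `FakeNormal` and the three small parameter tables are hypothetical stand-ins for what definitions.py provides (its contents are not reproduced here). It assumes only what the script itself relies on, namely that every statistical descriptor has a `dist` object with a `mean()` method and every operating or design descriptor has an `init` entry.

```python
# Hypothetical stand-in for a distribution object. The script only
# requires that the object stored under 'dist' has a mean() method.
class FakeNormal:
    def __init__(self, mu, sigma):
        self.mu = mu
        self.sigma = sigma

    def mean(self):
        return self.mu

# Hypothetical descriptor tables shaped the way the script expects.
# The names and 'init' values are borrowed from the printed evaluation
# environment; the real tables in definitions.py hold more entries.
statParams = {
    'mn1vt': {'dist': FakeNormal(0.0, 1.0)},
    'mn1u0': {'dist': FakeNormal(0.0, 1.0)},
}
opParams = {
    'vdd': {'init': 1.8},
    'temperature': {'init': 25},
}
designParams = {
    'mirr_w': {'init': 7.46e-05},
    'mirr_l': {'init': 5.63e-07},
}

# Same comprehensions as in the script.
nominalStat = {name: desc['dist'].mean() for name, desc in statParams.items()}
nominalOp = {name: desc['init'] for name, desc in opParams.items()}
initialDesign = {name: desc['init'] for name, desc in designParams.items()}

# One corner: nominal operating parameters plus the 'tm' netlist module.
corners = {'nom': {'params': nominalOp, 'modules': ['tm']}}

print(nominalStat)
print(corners['nom'])
```

Each resulting dictionary maps a parameter name directly to a number, which is the form in which the script passes values to the evaluator.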

Next, the cooperative multitasking OS is initialized. It uses MPI as the backend and starts every task in its own local folder which contains the copies of all files from the current folder (mirrorMap argument to MPI).

Finally, a pyopus.evaluator.performance.PerformanceEvaluator object is created from heads, analyses, measures, and variables defined in definitions.py and the corners variable constructed in this script. The evaluator will store the waveforms produced by the simulator in the local temporary folder of the machine where the simulation ran.

The performance evaluator is then called (pe()) and the values of the initial design parameters and nominal statistical parameters are passed. These parameters are added to the input netlist for the simulator. When the evaluation is finished, the performance measures are printed:

area | nom: 1.179e-09

cmrr | nom: 5.796e+01

gain | nom: 6.624e+01

gain_com | nom: 8.277e+00

gain_vdd | nom: -2.624e+01

gain_vss | nom: 3.777e+00

in1kmn2 | nom: 1.581e-16

in1kmn2id | nom: 1.581e-16

in1kmn2rd | nom: 0.000e+00

inoise1k | nom: 3.344e-14

isup | nom: 3.010e-04

onoise1k | nom: 3.337e-14

out_op | nom: 6.979e-03

overshdn | nom: 7.176e-02

overshup | nom: 8.924e-02

pm | nom: 7.004e+01

psrr_vdd | nom: 9.247e+01

psrr_vss | nom: 6.246e+01

slewdn | nom: 1.082e+07

slewup | nom: 1.006e+07

swing | nom: 1.474e+00

tsetdn | nom: 1.925e-07

tsetup | nom: 2.094e-07

ugbw | nom: 6.641e+06

vds_drv | nom: [0.58454814 0.8205568 0.433882 0.11164392 0.82752772 0.55567868 0.32221448 0.80099198]

vgs_drv | nom: [0.2121875 0.20869958 0.02675759 0.02674103 0.02677772 0.39175785 0.39175785 0.10682108]

Next, the list of stored waveform files and the hosts where they are stored is printed:

Result files across hosts

{(None, ('nom', 'ac')): '/tmp/restemp.calypso_7967_2.nom.ac.s8xost1h',

(None, ('nom', 'accom')): '/tmp/restemp.calypso_7967_6.nom.accom.fe5in9c1',

(None, ('nom', 'acvdd')): '/tmp/restemp.calypso_7967_5.nom.acvdd.j5xqaicj',

(None, ('nom', 'acvss')): '/tmp/restemp.calypso_7967_3.nom.acvss.qny1pj8d',

(None, ('nom', 'blank')): '/tmp/restemp.calypso_7967_1.nom.blank.1dgn84hu',

(None, ('nom', 'dc')): '/tmp/restemp.calypso_7967_2.nom.dc.9oion0n5',

(None, ('nom', 'noise')): '/tmp/restemp.calypso_7967_2.nom.noise.otzdr6la',

(None, ('nom', 'op')): '/tmp/restemp.calypso_7967_2.nom.op.ynjjg7io',

(None, ('nom', 'tran')): '/tmp/restemp.calypso_7967_2.nom.tran.0w17d3qc',

(None, ('nom', 'translew')): '/tmp/restemp.calypso_7967_4.nom.translew.u5d0kmli'}

A call to pyopus.evaluator.performance.PerformanceEvaluator.collectResultFiles() is made. This moves the result files from temporary storage on respective hosts to the current folder of the host that invoked the evaluator. The list of collected files is printed:

Collected result files

{('nom', 'ac'): 'res.nom.ac.pck',

('nom', 'accom'): 'res.nom.accom.pck',

('nom', 'acvdd'): 'res.nom.acvdd.pck',

('nom', 'acvss'): 'res.nom.acvss.pck',

('nom', 'blank'): 'res.nom.blank.pck',

('nom', 'dc'): 'res.nom.dc.pck',

('nom', 'noise'): 'res.nom.noise.pck',

('nom', 'op'): 'res.nom.op.pck',

('nom', 'tran'): 'res.nom.tran.pck',

('nom', 'translew'): 'res.nom.translew.pck'}
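Since the collected dictionary maps (corner, analysis) pairs to file names, all results can be loaded in one loop. The sketch below illustrates the pattern with the standard library only. The two pickle files it creates are placeholders holding plain dictionaries, not PyOPUS result objects, and the temporary folder is an assumption made so that the example runs anywhere.

```python
import os
import pickle
import shutil
import tempfile

# Create two placeholder pickle files so the loading loop has input.
# The payloads are plain dicts, not PyOPUS result objects.
folder = tempfile.mkdtemp()
files = {}
for corner, analysis in [('nom', 'op'), ('nom', 'ac')]:
    path = os.path.join(folder, "res.%s.%s.pck" % (corner, analysis))
    with open(path, 'wb') as f:
        pickle.dump({'corner': corner, 'analysis': analysis}, f)
    files[(corner, analysis)] = path

# Load every file, keeping the (corner, analysis) key.
loaded = {}
for key, path in files.items():
    with open(path, 'rb') as f:
        loaded[key] = pickle.load(f)

print(sorted(loaded.keys()))

# Remove the placeholder files again.
shutil.rmtree(folder)
```

With real result files, each loaded object would be queried the way the script does for the ('nom', 'blank') and ('nom', 'ac') entries.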

The file holding the environment in which the performance measures without an analysis were evaluated is loaded and the environment is printed:

'blank' analysis measure evaluation environment

{'isNmos': [1, 1, 1, 1, 1, 0, 0, 0],

'm': <module 'pyopus.evaluator.measure' from '/home/arpadb/pytest/pyopus/evaluator/measure.py'>,

'mosList': ['xmn1', 'xmn2', 'xmn3', 'xmn4', 'xmn5', 'xmp1', 'xmp2', 'xmp3'],

'np': <module 'numpy' from '/usr/local/lib/python3.5/dist-packages/numpy/__init__.py'>,

'param': {'c_out': 8.21e-12,

'cload': 1e-12,

'diff_l': 1.08e-06,

'diff_w': 7.73e-06,

'gu0nmm': 0.0,

'gu0pmm': 0.0,

'gvtnmm': 0.0,

'gvtpmm': 0.0,

'ibias': 0.0001,

'lev1': -0.5,

'lev2': 0.5,

'load_l': 2.57e-06,

'load_w': 3.49e-05,

'mirr_l': 5.63e-07,

'mirr_ld': 5.63e-07,

'mirr_w': 7.46e-05,

'mirr_wd': 7.46e-05,

'mirr_wo': 7.46e-05,

'mn1u0': 0.0,

'mn1vt': 0.0,

'mn2u0': 0.0,

'mn2vt': 0.0,

'mn3u0': 0.0,

'mn3vt': 0.0,

'mn4u0': 0.0,

'mn4vt': 0.0,

'mn5u0': 0.0,

'mn5vt': 0.0,

'mp1u0': 0.0,

'mp1vt': 0.0,

'mp2u0': 0.0,

'mp2vt': 0.0,

'mp3u0': 0.0,

'mp3vt': 0.0,

'out_l': 3.75e-07,

'out_w': 4.8e-05,

'pw': 1e-05,

'r_out': 19.7,

'rfb': 1000000.0,

'rin': 1000000.0,

'rload': 100000000.0,

'temperature': 25,

'tf': 1e-09,

'tr': 1e-09,

'tstart': 1e-05,

'vdd': 1.8},

'result': {'gain': {'nom': 66.24103484725208},

'gain_com': {'nom': 8.276939732033867},

'gain_vdd': {'nom': -26.235135394608754},

'gain_vss': {'nom': 3.776680236411179},

'in1kmn2': {'nom': 1.5814509234426575e-16},

'in1kmn2id': {'nom': 1.5814509234426575e-16},

'in1kmn2rd': {'nom': 0.0},

'inoise1k': {'nom': 3.343611882428561e-14},

'isup': {'nom': 0.0003009768725819436},

'onoise1k': {'nom': 3.3371018439855986e-14},

'out_op': {'nom': 0.00697923487611618},

'overshdn': {'nom': 0.07176477049919334},

'overshup': {'nom': 0.08923807202380866},

'pm': {'nom': 70.04010745060916},

'slewdn': {'nom': 10818342.824614132},

'slewup': {'nom': 10059147.304855734},

'swing': {'nom': 1.474471744100907},

'tsetdn': {'nom': 1.9248788635101697e-07},

'tsetup': {'nom': 2.0939194104629665e-07},

'ugbw': {'nom': 6641299.6248098845},

'vds_drv': {'nom': array([0.58454814, 0.8205568 , 0.433882 , 0.11164392, 0.826805 , 0.55567868, 0.32221448, 0.80172834])},

'vgs_drv': {'nom': array([0.2121875 , 0.20869958, 0.02675759, 0.02674103, 0.02677769, 0.39175785, 0.39175785, 0.10679811])}}}

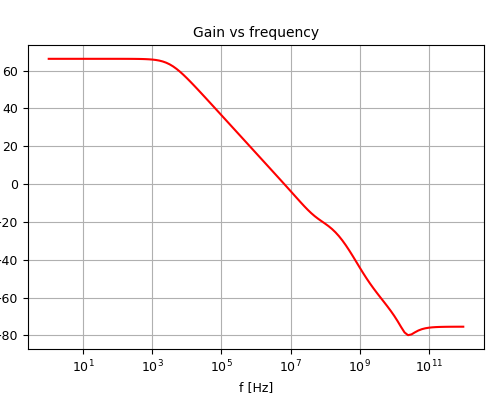

Gain of the initial Miller amplifier in nominal corner.

Next, the file holding the waveforms produced by the ac analysis is loaded. The frequency scale and the gain in dB are extracted and plotted.
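The gain expression in the script takes the magnitude of the complex ratio between the output voltage and the differential input voltage and converts it to decibels. The sketch below shows the same conversion on a hypothetical single-pole amplifier using only the standard library. The DC gain `A0` and pole frequency `fp` are made-up illustration values, not properties of the Miller opamp, and `gain_db` is a helper introduced here, not a PyOPUS function.

```python
import math

# Made-up single-pole amplifier used only for illustration.
A0 = 1000.0   # DC voltage gain (hypothetical)
fp = 1.0e3    # pole frequency in Hz (hypothetical)

def gain_db(vout, vin):
    """Return the magnitude of the complex voltage ratio in dB."""
    return 20.0 * math.log10(abs(vout / vin))

def response(f, vin=1.0 + 0.0j):
    """Complex output voltage of the single-pole model at frequency f."""
    return vin * A0 / (1.0 + 1j * f / fp)

vin = 1.0 + 0.0j
for f in (1.0, 1.0e3, 1.0e6):
    print("%10.1f Hz  %7.2f dB" % (f, gain_db(response(f, vin), vin)))
```

The script applies the same formula to whole NumPy arrays at once, so a single expression yields the gain at every frequency point of the ac analysis.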

The script cleans up all temporary files. Only the collected result files (.pck) remain on disk.

If we start the script on multiple local CPUs with

mpirun -n 5 python3 01-evaluator.py

we can see that the evaluation runs faster because analyses are distributed across multiple CPUs. The part of the output that lists the remotely stored result files changes to

Result files across hosts

{(MPIHostID('calypso'), ('nom', 'ac')): '/tmp/restemp.calypso_7a50_1.nom.ac.r0hhsg4f',

(MPIHostID('calypso'), ('nom', 'accom')): '/tmp/restemp.calypso_7a4e_1.nom.accom.advjsa_v',

(MPIHostID('calypso'), ('nom', 'acvdd')): '/tmp/restemp.calypso_7a4e_1.nom.acvdd.9nz70aco',

(MPIHostID('calypso'), ('nom', 'acvss')): '/tmp/restemp.calypso_7a4f_1.nom.acvss.5k4b2czs',

(None, ('nom', 'blank')): '/tmp/restemp.calypso_7a4d_1.nom.blank.ny6qsqz3',

(MPIHostID('calypso'), ('nom', 'dc')): '/tmp/restemp.calypso_7a50_1.nom.dc.5pe13zzs',

(MPIHostID('calypso'), ('nom', 'noise')): '/tmp/restemp.calypso_7a50_1.nom.noise.d283cnzi',

(MPIHostID('calypso'), ('nom', 'op')): '/tmp/restemp.calypso_7a50_1.nom.op.4lwpkitq',

(MPIHostID('calypso'), ('nom', 'tran')): '/tmp/restemp.calypso_7a50_1.nom.tran.9jl8tkra',

(MPIHostID('calypso'), ('nom', 'translew')): '/tmp/restemp.calypso_7a51_1.nom.translew.x0o3fm8o'}

We can see that only the ('nom', 'blank') corner-analysis pair is stored locally (host None) because the corresponding performance measures were evaluated on the local machine (the one that invoked the evaluator).
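To see at a glance which results are local and which are remote, the keys of such a dictionary can be grouped by host. The sketch below works on a small sample shaped like the printed output. As an assumption made for the sake of a self-contained example, the plain string 'calypso' stands in for the MPIHostID object, and only three of the ten entries are included.

```python
from collections import defaultdict

# Sample shaped like the printed dictionary: keys are
# (host, (corner, analysis)), values are file paths. Host None means
# the local machine. The string 'calypso' is a stand-in for MPIHostID.
resFiles = {
    (None, ('nom', 'blank')): '/tmp/restemp.calypso_7a4d_1.nom.blank.ny6qsqz3',
    ('calypso', ('nom', 'ac')): '/tmp/restemp.calypso_7a50_1.nom.ac.r0hhsg4f',
    ('calypso', ('nom', 'dc')): '/tmp/restemp.calypso_7a50_1.nom.dc.5pe13zzs',
}

# Group the (corner, analysis) pairs by the host that stores them.
byHost = defaultdict(list)
for (host, pair), path in resFiles.items():
    byHost[host].append(pair)

for host, pairs in byHost.items():
    label = 'local' if host is None else host
    print(label, sorted(pairs))
```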